AWS autoscale problems and Terminators are morons.

December 17, 2012

Recently, in discussions, I noticed several admins and managers complaining that AWS autoscale is not really cutting it for them. Some of the issues reported were:

- Autoscale takes time to respond to an unexpected surge in traffic, causing intermittent downtime for several users.

- Scaling down (scaling in) causes severe issues, and the web app throws errors.

The other reported problems are really variations of these two. Some suggestions for handling them, and an understanding of how autoscale works, will help.

Before going any further, let me remind you: autoscale is a slow process. It is not designed to help with a “sudden” and “unexpected” surge in traffic. That said, handling one is doable, because “unexpected” here is the human component. Just because Amazon AWS built autoscale does not mean you can throw capacity planning out the window.

You need to ask these questions and delve into your autoscale setup in detail.

1. How do you set your triggers? You can set triggers based on time. For example, if you expect every Friday to be a high-traffic day, don’t use CPU as an indicator. Just pre-launch instances on Friday, automate it and forget it. Shut them down when traffic nears its lows.

If you are using CPU or another metric, it is important to look at two factors: the threshold for the metric (e.g. CPU) and the time range. A lot of it is trial and error and depends on your usage scenario. I will take one example here. I expect my 3 web app instances to generally stay between 60-70% aggregate CPU throughout the day, and to spend no more than 15 minutes above 75%, which can happen when deploying large OS updates and app updates. Setting the time range to 30 minutes and the CPU threshold to 75% sounds logical, but it is not. Traffic does not work that way, and neither do averages. If you get a sudden traffic spike, nothing can help by then, since we always keep only 25% additional resources. The problem is also easy to miscalculate because the CPU measure is an aggregate.
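Going back to the time-based trigger at the start of this point, here is a minimal sketch of the decision itself. The instance counts and the function name are my own placeholders, not anything AWS provides; in practice you would feed the result into whatever scheduled action or cron job you automate this with.

```python
from datetime import datetime

# Hypothetical numbers: 3 permanent instances, 2 extra on the busy day.
BASE_INSTANCES = 3
EXTRA_ON_BUSY_DAY = 2
BUSY_WEEKDAY = 4  # datetime.weekday(): Monday is 0, so Friday is 4


def desired_instances(now: datetime) -> int:
    """Return how many instances should be running at the given time."""
    if now.weekday() == BUSY_WEEKDAY:
        return BASE_INSTANCES + EXTRA_ON_BUSY_DAY
    return BASE_INSTANCES
```

No CPU metric is involved at all, which is the point: if you already know Friday is busy, the calendar is a better trigger than an average.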

Spikes are handled with what you have NOW, not what you can have 15 minutes from now. Therefore, try to keep your permanent instance count high enough to sit at 40% average CPU or less under regular traffic, or at 50% under the peak (unexpected) traffic you have seen. Doing this alone makes your CPU threshold trigger more pronounced and buys you the time needed to judge whether additional resources are required.

It would seem appropriate to set 60% as the threshold, but we also need the time range.
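As a rough sketch of that sizing rule, assuming load spreads evenly and CPU scales linearly with instance count (a simplification), you can back out the permanent instance count from what you run today. The 65% figure is just the midpoint of my 60-70% range, and the function name is made up for illustration.

```python
import math


def instances_for_target(current_instances: int,
                         current_avg_cpu: float,
                         target_avg_cpu: float) -> int:
    """Instances needed so the same total load averages target_avg_cpu.

    Assumes load is spread evenly and CPU scales linearly with the
    number of instances, which is only an approximation.
    """
    total_load = current_instances * current_avg_cpu
    return math.ceil(total_load / target_avg_cpu)


# 3 instances around 65% aggregate CPU, aiming for 40% on regular traffic.
print(instances_for_target(3, 65, 40))  # 3 * 65 / 40 = 4.875, rounded up
```

By this estimate my 3 instances should really be 5 if I want to sit at 40% on a regular day.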

The aggregate CPU is calculated as an average over a time range, e.g. 300 seconds (5 minutes). A sample taken every second adds to the total, which is then divided by the 300 seconds.
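To make the averaging concrete, here is a minimal sketch of that simplified model: per-second samples, with the average checked only once a full period of samples is in. The function name and the 75% threshold are my choices for illustration, and the sample data is 40% CPU for one second followed by 100% for the remaining 299.

```python
def first_alarm_second(samples, period, threshold):
    """Return how many seconds have elapsed when the alarm first fires.

    The average is evaluated only at the end of each full period.
    Returns None if no period average exceeds the threshold.
    """
    for end in range(period, len(samples) + 1, period):
        window = samples[end - period:end]
        if sum(window) / period > threshold:
            return end
    return None


# 40% for the first second, then 100% for the next 299 seconds.
samples = [40] + [100] * 299
print(first_alarm_second(samples, period=300, threshold=75))
```

The CPU is pegged from the second sample onwards, yet nothing can react until the whole 300-second period has been collected.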

Therefore, if you had 40% CPU for the first second followed by 100% CPU for the next 299 seconds, autoscale would not trigger until the 301st second or thereafter. Do you see the problem here? This is why it is important to anticipate as much peak traffic as you can and prepare in advance. At the end of the day you have to find something that fits your costs, so, keeping your own financial allowances in mind, here is what you can additionally factor in.

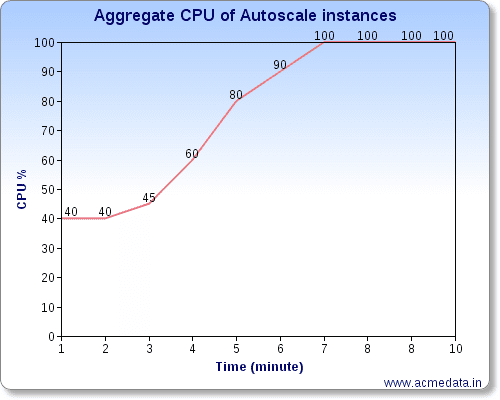

Estimate your time factor and CPU threshold at the point where traffic is building towards “Peak”, using my 40% average case from the example above.

Figure: aws autoscale threshold

In the graph above, we ideally need to launch an instance at 70%, or the 4.5-minute mark. But there is no way to know at that very moment whether it is indeed traffic or just a yum update check. Allowing for the benefit of the doubt, we should still expect the CPU to run at 100% for a while and give the new instance time to attach to the ELB and start serving requests. Let’s say that time is 3 minutes. I have simplified the graph using absolute numbers, and I will simplify the calculation to arithmetic instead of integral effing calculus, because nothing should be that complicated in real life for us.

I develop two scale-up policies. The first uses a time range of 15 minutes and looks for an aggregate of 70% to launch an additional instance. This keeps me below my 50% mark at all times. It is straightforward: we do this so that our CPU is not normally at high usage and can cover some surge in traffic without waiting for additional instances.

I make a second policy which looks at the 3-minute or 5-minute mark. Noobie admins will say “5 minutes should be 80%”, but now you know that this is obviously incorrect. The average over 5 minutes in our case is (40+40+45+60+80) / 5 = 53% (assuming one sample per minute). Detailed monitoring gives you per-minute sampling, which is why your autoscale instances are enabled for detailed monitoring whether you like it or not. This is my theory. Therefore, if you set a 60% threshold and a 5-minute duration for the additional instance, you should be safe from two different perspectives. Either policy “one” would have launched an instance, keeping your CPU below 50%, or policy “two” would have. Either of them satisfies both criteria, and you will not see twice the instances.
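The arithmetic for the second policy can be checked with a small rolling average. The first five per-minute values are the ones used above; the sixth value (100%) is a hypothetical next minute I added to show what happens as the spike continues.

```python
def rolling_average(samples, window):
    """Average of the last `window` per-minute samples at each minute."""
    return [
        sum(samples[i - window:i]) / window
        for i in range(window, len(samples) + 1)
    ]


# First five values are from the text; the sixth (100) is an assumption.
cpu_per_minute = [40, 40, 45, 60, 80, 100]
averages = rolling_average(cpu_per_minute, window=5)

for minute, avg in zip(range(5, 7), averages):
    print(minute, avg, "60% fires:", avg > 60, "80% fires:", avg > 80)
```

At minute 5 the average is 53%, and one minute later it is 65%. Under the assumed sixth sample, a 60% threshold fires while an 80% threshold is still waiting even though the CPU is already at 100%.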

All you have to do is ensure that you are planning in advance. Autoscale is not magic. If your company’s marketing is running a campaign that predicts “a large frenzied sale surge”, then despite their failings so far you should nevertheless be prepared and launch additional instances as permanent for that time period. Note: when I say launch manually, I mean you add the instances to the ELB by hand and keep them attached; don’t modify the autoscale policies to do so.

2. Termination, scaling-in or scaling-down

This is rather tricky. A fact outside the control of Amazon or any provider is that when you shut down a system, it does not care about the state of the application’s users unless you program that in some way, like when Windows Server warns that users are connected to the Terminal Server. For a web application this is left to us to decide. Therefore, once you accept that connections active at the time of termination will exit with errors for that particular instance, the question is what error rate is acceptable.

Say you choose to remove instances at 10% aggregate CPU so that you are back to 30% to 40%: how many users are affected, and in what way? If 10% means 1 million users who are posting memes, then it is something you have to decide. If 10% means it’s just 10 users, but they are involved in commercial transactions worth thousands or millions of dollars, then the problem is not about numbers.

One way to deal with this is to handle errors as gracefully as possible. For example, if you use AJAX-based user registration you can display a message such as “Please resubmit the form, an unknown error has occurred on our end”, or if yours is a streaming app you could try a delayed reconnect. Something along those lines. Once the terminated instance is removed from the ELB, the subsequent request should be directed to an online instance, thus averting the much unwanted management trial-room hassles where you have chosen benefit-of-the-doubt (“wtf are you talking about, looks fine to me -.-”) instead of the long, rather unwieldy technical explanation which may be misconstrued as another lazy excuse (“well it begins like this… you see on Abhishek’s blog… and hence we can do nothing about those… :(”).
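The delayed reconnect idea can be sketched as a small retry wrapper. This is only an illustration: `call_with_retry` and its parameters are names I made up, and `request` stands for whatever function your client uses to talk to the app behind the ELB.

```python
import time


def call_with_retry(request, attempts=3, delay_seconds=1.0):
    """Call request(); on ConnectionError wait and retry, up to `attempts` times."""
    for attempt in range(1, attempts + 1):
        try:
            return request()
        except ConnectionError:
            if attempt == attempts:
                # Out of retries: let the caller show a "please resubmit" message.
                raise
            time.sleep(delay_seconds)
```

The first failure is the request that hit the instance being terminated. The retry should land on an instance that is still registered, so the user only sees a short delay instead of an error.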

If at all possible, I avoid too many terminations or scale-downs. I would prefer to maintain the capacity that supports any unhinged marketing campaign or a random Reddit front page for entirely irrelevant reasons.

Speaking of termination… “I’ll be back”