Quick and dirty intro to Fabric and AWS integration for automation

December 18, 2012

Despite most of my work involving PHP setups, I have found Python to be the most useful tool for a whole bunch of supporting tasks. One of them is running commands on, or deploying packages to, Amazon EC2 instances. For really large setups this is a very cool way to get the job done. Imagine that you have 10-12 servers and autoscaling changes the number of servers every now and then. Let’s say you wanted to git update all the servers with the latest copy of the code, or restart a process. You can do this with one command over SSH. Yes, but how do you do it on all the servers? So you search for “parallel SSH”. It seems all fine and dandy till you realize you still need to list all the hostnames. “Parallel SSH, why you no just read my mind”. We are going to make something like parallel SSH really quickly, one that works on AWS and is easy to bend to whatever you want it to do.

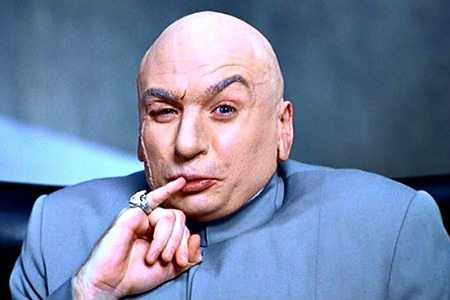

However, this SSH is, well, cross-platform, I suppose; all you need is to be able to run Python (2.5 or higher). I am not going into in-depth details. I want to show you how you can do this yourself and make you a believer. Then of course I recommend you do further reading. There is a lot of literature out there, but no working examples that do what I am showing you here. Once you get the idea, you will be an unstoppable crazy lunatic and be quite pleased with your megalomaniac self. Back to reality…

Prepare

Fabric: prepare your Python install by installing this package. The code below uses the Fabric 1.x API (`from fabric.api import ...`).

Boto: Next you need the Boto packages for Python. Install that too.

Get your AWS security keys with at least the permission to read information about all EC2 instances. You don’t need any more than that if you just want to SSH into the systems.

Also prepare your SSH key, of course. Place it anywhere, and now you can begin writing some code.

There are two parts to this.

1st part:

Use Boto to choose your EC2 Instances.

All instances have some attributes. Plus, good DevOps always tag their instances… you tagged your instances, didn’t you? Well, no matter.

Code below (ignore fabric references, we’ll get to that in a bit)

fabfile.py (name fabfile.py is important or it won’t work)

    import boto
    from fabric.api import env, run, parallel

    AWS_ACCESS_KEY_ID = 'GET_YOUR_OWN'
    AWS_SECRET_ACCESS_KEY = 'GET_TO_DAT_CHOPPA'

    def set_hosts():
        from boto.ec2.connection import EC2Connection
        ec2conn = EC2Connection(AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY)
        i = []
        for reservation in ec2conn.get_all_instances(filters={
                'key-name': 'privatekey',
                'instance-state-name': 'running',
                'root-device-type': 'instance-store'}):
            # print reservation.instances (for debug)
            for host in reservation.instances:
                # build the user@hostname string for ssh to be used later
                i.append('USERNAME@' + str(host.public_dns_name))
        return i

    env.key_filename = ['/path/to/key/privatekey.pem']
    env.hosts = set_hosts()

Quick explanation: I have used a couple of filters above, passed in through the “filters” keyword argument. Here I chose to filter by private key name for a bunch of servers that are in the state “running” (always good to have) and whose root-device-type is instance-store. Now, if you had tagged your servers, the key/value filter would look like this:

> {'tag:Role' : 'WobblyWebFrontend'}
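
If you end up with a few different server groups, it can help to build that dict in one place. A minimal sketch, assuming a helper called `build_filters` (the name and its parameters are mine, not boto's); the filter keys themselves are the ones used in this post:

```python
def build_filters(role=None, key_name=None, running_only=True):
    """Build a filters dict for boto's EC2Connection.get_all_instances().

    `role` is matched against a tag named "Role", as in the post; the
    function name and its parameters are made up for this sketch.
    """
    filters = {}
    if running_only:
        filters['instance-state-name'] = 'running'
    if key_name is not None:
        filters['key-name'] = key_name
    if role is not None:
        filters['tag:Role'] = role
    return filters


# Every key in the dict must match, so this selects running instances
# that are also tagged Role=WobblyWebFrontend.
frontend_filters = build_filters(role='WobblyWebFrontend')
```

The result can be passed as `filters=` to `get_all_instances()` in place of the literal dict inside `set_hosts()`.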

You can use the reference here to find more filters. We are basically filtering by instance metadata, or what you would get from the ec2 describe command. You can even use the autoscale group name. The idea is for you to select the servers you want to run a particular command on, so that is left to you. “I can only show you the code, you must debug it on your own”. lulz

2nd part:

Now that, assuming I am right, you have chosen all the servers you need to run your command on, we are going to write those commands in the same file.

Notice that the function set_hosts returns a list of hostnames. That’s all we needed.
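
To see what that list looks like without touching AWS, here is a small sketch of the same flattening step. `to_host_strings` and the fake reservation objects are made up for illustration, and so are the hostnames; skipping instances with no public DNS name is my assumption, not something boto does for you:

```python
from collections import namedtuple


def to_host_strings(reservations, user):
    """Flatten boto reservations into 'user@hostname' strings for env.hosts."""
    hosts = []
    for reservation in reservations:
        for instance in reservation.instances:
            # An instance with no public DNS name would produce a useless
            # host string, so leave it out (an assumption of this sketch).
            if instance.public_dns_name:
                hosts.append('%s@%s' % (user, instance.public_dns_name))
    return hosts


# Stand-in objects so the sketch runs without AWS credentials; real code
# would pass the result of ec2conn.get_all_instances(...) instead.
FakeInstance = namedtuple('FakeInstance', 'public_dns_name')
FakeReservation = namedtuple('FakeReservation', 'instances')

reservations = [
    FakeReservation([FakeInstance('ec2-203-0-113-10.compute-1.amazonaws.com')]),
    FakeReservation([FakeInstance(''),
                     FakeInstance('ec2-203-0-113-11.compute-1.amazonaws.com')]),
]
hosts = to_host_strings(reservations, 'USERNAME')
```

Here `hosts` ends up with two entries, one per instance that actually has a public name, which is exactly the shape `env.hosts` wants.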

continued…fabfile.py

    @parallel
    def uptime():
        # Fabric calls this once for every host in env.hosts
        run('uptime')

…and we are done with coding. No really.

cd into the directory where you saved fabfile.py and run the program like so.

    $ fab uptime

Satisfying splurge of output on the command line follows…

No wait! What happened here? When you invoke the command “fab”, Fabric looks for fabfile.py and runs the function that matches the first argument. So you can keep writing multiple functions for, say, “svn checkout”, “wget” or “shutdown now”, whatever.
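
If the lookup-by-name part sounds like magic, here is a toy model of it. This is not Fabric's code, just plain Python showing the idea; `dispatch` and the two dummy tasks are hypothetical names:

```python
def uptime():
    return 'ran uptime'


def restart():
    return 'ran restart'


def dispatch(namespace, task_names):
    """Look up each requested name in `namespace` and call it, in order."""
    results = []
    for name in task_names:
        task = namespace.get(name)
        if not callable(task):
            raise SystemExit('Unknown task: %s' % name)
        results.append(task())
    return results


# Roughly what happens for `fab uptime restart`, minus all the SSH.
tasks = {'uptime': uptime, 'restart': restart}
results = dispatch(tasks, ['uptime', 'restart'])
```

The real thing also handles arguments, hosts and connections, but the core move is the same: a name on the command line picks a function in the file.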

The @parallel decorator before the function tells Fabric to execute the command “SIMULFKINGTANEOUSLY” on all servers. That is your parallel SSH.
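
To make “simultaneously” concrete, here is a rough stand-in that fans one function out over a list of hosts using a thread pool from the standard library. It is only an illustration of the idea, not how Fabric implements `@parallel`; `run_on_all`, `fake_uptime` and the host names are invented for the sketch:

```python
from multiprocessing.dummy import Pool  # thread-backed Pool from the standard library


def run_on_all(hosts, task, pool_size=None):
    """Call task(host) for every host concurrently; return {host: result}."""
    if not hosts:
        return {}
    pool = Pool(pool_size or len(hosts))
    try:
        results = pool.map(task, hosts)  # results come back in host order
    finally:
        pool.close()
        pool.join()
    return dict(zip(hosts, results))


def fake_uptime(host):
    # Placeholder for the SSH round trip that run('uptime') would make.
    return 'uptime from %s' % host


output = run_on_all(['web1', 'web2', 'web3'], fake_uptime)
```

Fabric 1.x's `@parallel` also accepts a `pool_size` argument, e.g. `@parallel(pool_size=5)`, if you would rather not hit every server at once.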

May the force be with you. Although I know the look on your face right now.